Okay, maybe not really, but the story is as follows. While I was studying for the final exam in Automatic Control Theory I, I was wondering which letter I would get. This crucial question bothers every student (sometimes the question is only “will I pass or not?”). I wanted to predict the probabilities of getting an A, B, … or Fx. Not the general probabilities, but my own. Isn’t that a classification problem? Sure, let the neural networks deal with that.

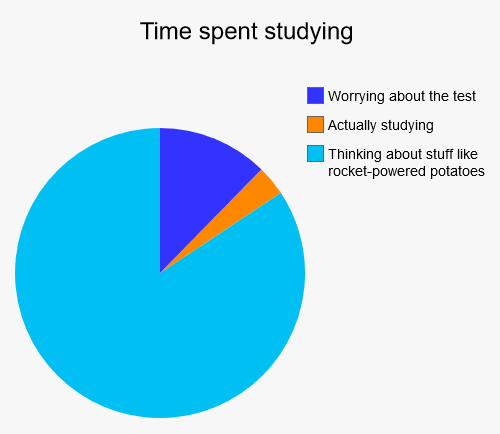

Over 3 years I have gone through many exams in various courses, with diverse results. In most cases the grade depends on the course difficulty, but also on several other features like personal interest. The grade should also be related to the time spent studying. I hope that final grades aren’t just sheer luck, because then they would be pointless. So, I want to create a neural network that will find a pattern between the course features and the grades obtained. It should reflect my own ability to take exams. As training data, I can use all my previous results. The trained model can then be used to predict future grades. If it works, I will know my final grade even before the real exam! A student’s dream.

First, I have to prepare the data. I tried to choose all the attributes that can influence the final grade. The most important attribute is the course attainment evaluation from the AIS (statistics from previous years). I added some course characteristics such as whether the course is compulsory, the number of credits, the semester and others. Then I rated each course on a scale from 0 to 10 for how much I like it, how difficult I found it and how good my lecture attendance was. I put together 20 attributes which might matter. One example looks like this:[1]

Proces_Control = [1.0 27.6 20.7 20.0 16.5 10.7 4.5 1 6 4 0 0.0 0 0 1 2.0 4 8 8 3 10]

I trained the neural network as before, this time I have 54 samples. I hope it’s enough, because I don’t have any more. The first result was:

| A | B | C | D | E | Fx |
| --- | --- | --- | --- | --- | --- |
| 61 % | 4 % | 21 % | 7 % | 7 % | 0 % |

Good! At least I cannot fail. I trained the neural network again. The probability of getting an A even increased. Great! I trained the neural network once more, with the data still the same.

| A | B | C | D | E | Fx |
| --- | --- | --- | --- | --- | --- |
| 1 % | 37 % | 58 % | 3 % | 1 % | 0 % |

Eeeh? Not only did this worsen my performance and make my hope disappear, it also suggests that the neural network isn’t the ultimate tool. I am not sure I can rely on a model that is slightly inconsistent with itself. The next problem is that the probability of getting an Fx is always 0 %, because the neural network doesn’t even know this grade. Or I can dismiss this problem by declaring that the neural network has simply discovered my superpower of repelling Fx :D. Further, I was curious what other machine learning classifiers would predict. I also didn’t forget to optimize them. They showed me this:

| Mode | Classification tree | k-nearest neighbors | Naive Bayes | Multiclass SVM | Discriminant analysis |
| --- | --- | --- | --- | --- | --- |
| without optimization | B | C | A | C | C |
| with optimization | A | A | A | A | C |

I don’t like discriminant analysis anymore. I want to pretend that it wasn’t the most reliable classifier last time. It looks like the optimization improves not only the classification models, but also my performance (except for DA). Maybe for this kind of problem it would be better to use regression instead of classification, since grades can be seen as numbers. The results are as follows.

| Regression tree | SVM regression | Gaussian process regression | Multiple linear regression | Linear regression |
| --- | --- | --- | --- | --- |
| A (1.00) | B (1.48) | B (1.44) | B (1.46) | A (1.05) |
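How a regression output turns into a letter can be sketched in a few lines of Python. The numeric scale here is an assumption, since the post does not state it: A = 1.0, B = 1.5, C = 2.0, D = 2.5, E = 3.0, Fx = 4.0. The names `GRADE_VALUES` and `nearest_grade` are made up for this illustration, and picking the closest grade is only one plausible rule, although under this assumed scale it does reproduce the letters in the table.

```python
# Assumed numeric grade scale (not given in the post).
GRADE_VALUES = {"A": 1.0, "B": 1.5, "C": 2.0, "D": 2.5, "E": 3.0, "Fx": 4.0}


def nearest_grade(value: float) -> str:
    """Return the letter whose assumed numeric value is closest to `value`."""
    return min(GRADE_VALUES, key=lambda letter: abs(GRADE_VALUES[letter] - value))


# Regression outputs copied from the table above.
outputs = {
    "Regression tree": 1.00,
    "SVM regression": 1.48,
    "Gaussian process regression": 1.44,
    "Multiple linear regression": 1.46,
    "Linear regression": 1.05,
}

for model, value in outputs.items():
    print(f"{model}: {nearest_grade(value)} ({value:.2f})")
```

With this rule, anything below 1.25 counts as an A, so the three models that answered B were all just a few hundredths away from the B value itself.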

The question is, which one can I trust? I can test the accuracy of the models, as I have many times before, by putting 30 % of the samples aside and using them for validation. However, the set of samples isn’t big, so the results aren’t very accurate. The reliability of the models didn’t turn out to be overwhelming. The best ones, the optimized classifiers, reach around 70 % reliability. I can assume that part of the grade comes down to luck and randomness.
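To see why such a small hold-out set gives coarse numbers, here is a minimal Python sketch of the split. It is not the tooling actually used for the models above; `holdout_split` and `accuracy` are hypothetical helpers, and the 54 integers merely stand in for the real course records.

```python
import random


def holdout_split(samples, validation_fraction=0.3, seed=0):
    """Shuffle a copy of the samples and return (training, validation) lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_validation = round(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(actual)


# 54 placeholder indices stand in for the 54 real samples.
train, validation = holdout_split(range(54))
print(len(train), len(validation))  # 38 16

# Each validation sample is worth this many percentage points of accuracy.
print(100 / len(validation))  # 6.25
```

With only 16 validation samples, the accuracy of a single split can move only in steps of 6.25 percentage points, so one lucky or unlucky guess visibly changes the score. For instance, 11 correct out of 16 would give 68.75 %.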

So, if the optimized classifiers are the best and they are telling me that I will get an A, can I rely on them? Can I go to the exam feeling that I can’t fail, as my models suggest? I wish I could. Now you might be curious how it ended. B, that’s my final grade. Now I am not sure whether I should be happy or disappointed with the B, because it means that my classification models aren’t perfect. Or they are, but maybe I should have studied more before the exam[2] instead of trying to predict a grade using artificial intelligence tools :D. While typing in data by hand for hours, I was asking myself whether I shouldn’t rather be learning control theory right now.

Nevertheless, the predictions were close and some of them were right. If I look at the results again, not one by one but as a whole, I clearly see they suggest that the grade will be between A and C. It works! I want to naively believe it was not a coincidence. One more exam is ahead of me. This time all the models agreed it will be an A (except DA, again). They are even more certain than I am. I will see. (Edit: my classifiers did not betray me.)

A fun fact at the end: it is suspicious that the predictions of certain models aren’t influenced by the amount of study time. However, according to the k-NN model (which initially said C), if I increase the number of study days to 26, the prediction improves to B, and if I want to get an A, I have to study for 860 days! No thanks, B is fine.

1. The attributes are as follows: [grade – this will be used as the Y label, A (percentage of students who got an A in previous years), B, C, D, E, Fx, level of study (1 = bachelor; 2 = master), semester, credits, completion (0 = examination; 1 = classified), form (0 = written; 1 = oral; 0.5 = both), compulsory (0 = compulsory; 1 = optional), cheating (0 = not allowed; 1 = somehow allowed), attempt (1-3), days of study before an exam, difficulty (0-10), interest (0-10), math character (0-10), preparation during a semester (0-10), attendance (0-10)] ↩

2. Nah, I just broke the rule: don’t think about the one question you don’t want to pick. ↩